
I am a sixth-year PhD student in Computer Sciences at UW-Madison, advised by Prof. Vikas Singh. My broad research interests are in machine learning and its applications to real-world problems. I am especially interested in the interplay between concepts from applied mathematics, such as differential equations and operators, and the strong function approximation properties of the neural networks prevalent in deep learning. Before coming to Madison, I was part of the Adobe Acrobat Reader team. Before that, I was a Research Intern at the BigData Experience Lab of Adobe Research, where I worked with an amazing mentor, Dr. Ritwik Sinha. As an undergrad, I spent four amazing years at the Indian Institute of Technology (IIT) Kharagpur, where I graduated with a B.Tech (Hons.) in Computer Science and Engineering. I was fortunate to be advised by Prof. Pabitra Mitra during my undergraduate studies. Purulia’s red soil shaped my childhood, one cricket game at a time.

Email / Twitter / GitHub / Resume / Google Scholar / LinkedIn


Sep 2025: New paper on learnable sampling for PINNs accepted to NeurIPS 2025!

Jun 2024: New paper on Implicit Representations accepted to ICML 2024!

Mar 2024: Our work on sampling of temporal trajectories is out on arXiv.


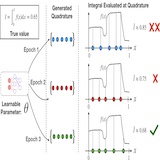

Sourav Pal, Kamyar Azizzadenesheli, Vikas Singh
NeurIPS 2025

The growing body of work on Physics-Informed Neural Networks (PINNs) seeks to use machine learning strategies to improve methods for discovering solutions of Partial Differential Equations (PDEs). While classical solvers may remain the preferred tool in the short term, PINNs can be viewed as complementary. The expectation is that in some specific use cases, they can even be effective as standalone solvers. A key step in training PINNs is selecting the domain points at which the loss is evaluated. Monte Carlo sampling remains the dominant choice but is often suboptimal in the low-dimensional settings common in physics. We leverage recent advances in asymptotic expansions of quadrature nodes and weights (for weight functions belonging to the modified Gauss-Jacobi family), together with suitable adjustments to the parameterization, to build a data-driven framework for learnable quadrature rules. A direct benefit is improved PINN performance, relative to existing alternatives, on a wide range of problems studied in the literature. Beyond finding a solution for a single PDE instance, our construction enables learning rules that predict solutions for a given family of PDEs via hyper-networks, a useful capability for PINNs.
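For readers new to the idea, here is a minimal sketch of why the choice of evaluation points matters. It compares a fixed Gauss-Legendre rule (the α = β = 0 member of the Gauss-Jacobi family) against plain Monte Carlo sampling for a 1D integral of the kind that appears in a PINN loss. This is only an illustration under simplifying assumptions, not the learnable quadrature method from the paper: the integrand `np.exp` is a hypothetical stand-in for a squared PDE residual, and the function names are illustrative.

```python
import numpy as np


def gauss_legendre_integral(f, a, b, n):
    """Integrate f over [a, b] with a fixed n-point Gauss-Legendre rule.

    Gauss-Legendre is the Gauss-Jacobi rule with alpha = beta = 0
    (constant weight on [-1, 1]); it is NOT a learned rule.
    """
    x, w = np.polynomial.legendre.leggauss(n)  # nodes and weights on [-1, 1]
    t = 0.5 * (b - a) * x + 0.5 * (a + b)      # affine map of nodes to [a, b]
    return 0.5 * (b - a) * np.sum(w * f(t))    # rescale weights by the Jacobian


def monte_carlo_integral(f, a, b, n, seed=0):
    """Estimate the same integral from n uniform random collocation points."""
    rng = np.random.default_rng(seed)
    t = rng.uniform(a, b, size=n)
    return (b - a) * np.mean(f(t))


# Hypothetical smooth stand-in for a squared residual on a 1D domain.
f = np.exp
a, b, n = -1.0, 1.0, 8
exact = np.exp(b) - np.exp(a)

quad_err = abs(gauss_legendre_integral(f, a, b, n) - exact)
mc_err = abs(monte_carlo_integral(f, a, b, n) - exact)
print(f"Gauss-Legendre error: {quad_err:.2e}, Monte Carlo error: {mc_err:.2e}")
```

With the same budget of 8 points, the fixed quadrature rule is essentially exact for this smooth integrand, while the Monte Carlo estimate carries sampling noise that shrinks only like 1/sqrt(n). A learnable rule, as described in the abstract, goes further by adapting the nodes and weights to the problem instead of fixing them in advance.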


Sourav Pal, Harshavardhan Adepu, Clinton Wang, Polina Golland, Vikas Singh
ICML 2024

The idea of representing a signal as the weights of a neural network, called Implicit Neural Representations (INRs), has led to exciting implications for compression, view synthesis, and 3D volumetric data understanding. One problem in this setting is the use of INRs for downstream processing tasks. Despite some conceptual results, this remains challenging because the INR for a given image or signal often exists in isolation. What does the neighborhood around a given INR correspond to? Motivated by this question, we offer an operator-theoretic reformulation of the INR model, which we call Operator INR (O-INR). At a high level, instead of mapping positional encodings to a signal, O-INR maps one function space to another. A practical form of this general formulation is obtained by appealing to integral transforms. The resulting model does not need the multi-layer perceptrons (MLPs) used in most existing INR models; we show that convolutions are sufficient and offer benefits, including numerically stable behavior. We show that O-INR can easily handle most problem settings in the literature and offers a performance profile similar to the baselines. These benefits come with minimal, if any, compromise.


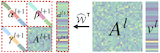

Sourav Pal, Zhanpeng Zeng, Sathya N. Ravi, Vikas Singh
ICML 2023

Neural Controlled Differential Equations (NCDEs) are a powerful mechanism for modeling the dynamics of temporal sequences, e.g., in applications involving physiological measures, where apart from the initial condition, the dynamics also depend on subsequent measures or even on a different “control” sequence. However, NCDEs do not scale well to longer sequences. Existing strategies adapt rough path theory and instead model the dynamics over summaries known as log signatures. While rigorous and elegant, these summaries are difficult to invert, which limits the scope of problems (e.g., reconstruction, generative modeling) where these ideas can offer strong benefits. For tasks where it is sensible to assume that the (long) sequences in the training data have a fixed number of temporal measurements, an assumption that holds in most experiments tackled in the literature, we describe an efficient simplification. First, we recast the regression/classification task as an integral transform. We then show how restricting the class of operators permissible in the integral transform allows the use of a known algorithm that leverages non-standard wavelets to decompose the operator. As a result, our task (learning the operator) simplifies radically. A neural variant of this idea yields consistent improvements across a wide range of use cases tackled in existing works. We also describe a novel application to modeling tasks involving coupled differential equations.


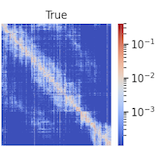

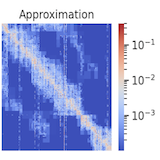

Zhanpeng Zeng, Sourav Pal, Jeffery Kline, Glenn Fung, Vikas Singh
ICML 2022

We revisit classical Multiresolution Analysis (MRA) concepts such as wavelets, whose potential value for efficient self-attention remains underexplored. We show that simple approximations based on empirical feedback, together with design choices informed by modern hardware and implementation challenges, eventually yield an MRA-based approach for self-attention with an excellent performance profile across most criteria of interest. We undertake an extensive set of experiments and demonstrate that this multi-resolution scheme outperforms most efficient self-attention proposals and is favorable for both short and long sequences.


Ronak Mehta*, Sourav Pal*, Vikas Singh, Sathya N. Ravi
CVPR 2022. Also at UpML 2022, the Updatable Machine Learning Workshop at ICML 2022

Machine unlearning is the art of removing specific training samples from a predictive model as if they had never been in the training dataset. Recent ideas leveraging optimization-based updates scale poorly with the model dimension d because they require inverting the Hessian of the loss function. We use a variant of a new conditional independence coefficient, L-CODEC, to identify the subset of model parameters with the most semantic overlap at the level of individual samples. Our approach completely avoids the need to invert a (possibly) huge matrix. This makes approximate unlearning possible in settings that would otherwise be infeasible, including vision models used for face recognition and person re-identification, and transformer-based NLP models.


Ankan Mullick, Sourav Pal, Projjal Chanda, Arijit Panigrahy, Anurag Bharadwaj, Siddhant Singh, Tanmoy Dam
TrueFact, SIGKDD 2019

We use deep neural networks to detect facts and opinions in online news media. We also show how the factuality, opinionatedness, and sentiment fraction of different news articles change over certain events in different time frames.


Sourav Pal*, Tharun Mohandoss*, Pabitra Mitra
ICMV 2018

We present two methods for predicting visual attention maps. The first is a supervised learning approach in which we collect eye-gaze data for the task of driving and use it to train a model that predicts the attention map. The second is a novel unsupervised approach in which we train a model to learn to predict attention as it learns to drive a car.


Prakhar Gupta, Sourav Pal*, Shubh Gupta*, Ajaykrishnan Jayagopal*, Ritwik Sinha
WACV 2018

We introduce deep learning models for element-level saliency prediction in mobile user interfaces, with the goal of improving their usability.


Saliency Prediction for a Mobile User Interface

Saliency Prediction for Informational Documents

